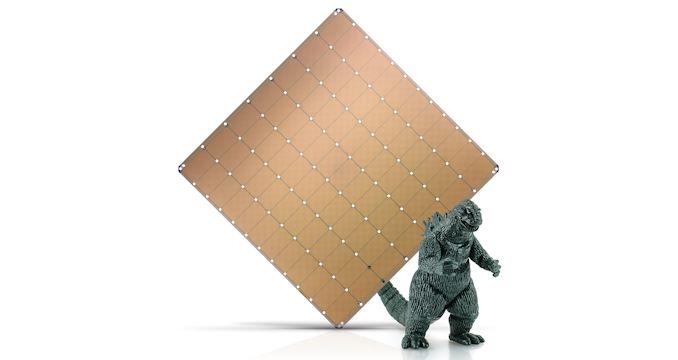

Cerebras 2.6T 250M Series 4B

Unleashing Unprecedented Power

The Cerebras 2.6T 250M Series 4B is a true game-changer in AI computing. Its headline figure is its transistor count: 2.6 trillion transistors on a single chip, which places it among the largest chips ever built. This transistor budget puts a very large amount of compute and memory on one piece of silicon. As a result, complex AI workloads can run at high speed without being split across many separate processors.

One of the key features that sets the Cerebras 2.6T 250M Series 4B apart is its large on-chip memory, described as 250 million individual memory units. Keeping data on the chip greatly reduces the need for external memory access, which lowers latency and improves overall performance. As long as a model fits in on-chip memory, it can be trained and executed directly on the chip. This avoids the bottleneck caused by moving data between the processor and external memory.
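Whether a model can be trained and run entirely on the chip depends first on whether it fits in on-chip memory. The back-of-envelope sketch that follows is a hypothetical illustration and not a Cerebras tool. The function names, the assumption of 2 bytes per parameter (FP16/BF16 weights), and the 16 GiB budget are illustrative choices, not published specifications of this chip. The check also counts weights only.

```python
# Hypothetical back-of-envelope check: do a model's weights fit in a given
# on-chip memory budget? The budget is an assumption supplied by the caller,
# not a published specification of any particular chip.

GIB = 1024 ** 3  # bytes in one gibibyte


def weights_bytes(num_params: int, bytes_per_param: int = 2) -> int:
    """Bytes needed to store the weights alone (2 bytes/param ~ FP16/BF16)."""
    return num_params * bytes_per_param


def fits_on_chip(num_params: int, on_chip_bytes: int, bytes_per_param: int = 2) -> bool:
    """True if the weights alone fit in the budget.

    Activations, gradients and optimizer state need additional memory
    during training and are deliberately ignored in this rough check.
    """
    return weights_bytes(num_params, bytes_per_param) <= on_chip_bytes


if __name__ == "__main__":
    budget = 16 * GIB  # assumed, illustrative budget
    for params in (1_000_000_000, 30_000_000_000):
        need_gib = weights_bytes(params) / GIB
        print(f"{params:,} params need {need_gib:.1f} GiB; fits: {fits_on_chip(params, budget)}")
```

Because training also stores gradients and optimizer state, a model whose weights only barely fit would in practice need more memory than this check reports.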

Efficiency Redefined

Despite its enormous size and power, the Cerebras 2.6T 250M Series 4B is designed to be energy-efficient. Traditional AI chips often consume substantial amounts of power, which limits their scalability and practicality. Cerebras addresses this challenge by building advanced power management techniques into the chip's design.

The chip uses a power delivery system that distributes power across its vast array of transistors. Because power allocation is adjusted dynamically to match workload requirements, the Cerebras 2.6T 250M Series 4B can achieve high energy efficiency without compromising performance. This approach to power management opens up new possibilities for AI applications, allowing more complex models to be trained and executed within reasonable power constraints.

Unparalleled Scalability

Scalability is a critical factor in AI computing, because it determines how well a system handles increasingly complex models and datasets. The Cerebras 2.6T 250M Series 4B excels here. Its size and memory capacity allow multiple AI models to run in parallel, increasing overall throughput for both training and inference.

Furthermore, the chip is designed to integrate with existing AI frameworks and software libraries, including popular deep learning frameworks such as TensorFlow and PyTorch. This makes it easier for developers to take advantage of the chip's capabilities without significant changes to their existing codebase. The Cerebras 2.6T 250M Series 4B lets researchers and developers take on more ambitious AI projects and push the boundaries of what is possible in the field.

Unlocking New Possibilities

The Cerebras 2.6T 250M Series 4B has the potential to revolutionize various industries that heavily rely on AI technologies. From healthcare and finance to autonomous vehicles and natural language processing, this chip opens up new frontiers for innovation and discovery. Its unprecedented power, efficiency, and scalability enable researchers and developers to tackle complex problems that were previously deemed infeasible.

In healthcare, for example, the Cerebras 2.6T 250M Series 4B can accelerate medical imaging analysis, enabling faster and more accurate diagnoses. In the financial sector, it can support more sophisticated risk analysis and fraud detection, improving both security and efficiency. These are only a few examples, and the Cerebras 2.6T 250M Series 4B is at the forefront of this AI revolution.

Conclusion

The Cerebras 2.6T 250M Series 4B represents a significant milestone in AI computing. With its massive size, exceptional power, energy efficiency, and scalability, it has the potential to reshape the landscape of AI research and development. As this chip becomes more accessible to researchers and developers, we can expect major advances across many industries, unlocking new possibilities and driving innovation forward. The Cerebras 2.6T 250M Series 4B is a game-changer that is set to shape the future of AI computing.